Engineering and music at the Frontiers of Engineering

October 4, 2010

A couple of weeks ago, I was fortunate to be able to attend the National Academy of Engineering’s Frontiers of Engineering symposium on behalf of my day job. One of the sessions focused on Engineering and Music, organized by Daniel Ellis of Columbia University and Youngmoo Kim of Drexel University, and some of my notes are below. (Links in the titles go to PDFs of short papers by each speaker.)

Brian Whitman, The Echo Nest: Very Large-Scale Music Understanding

What does it mean to “teach computers to listen to music”? Whitman, co-founder and CTO of The Echo Nest, talked about the path to founding the company as well as some of its guiding principles. Whitman discussed the company’s approach to learning about music, which mixes acoustic analysis of the music itself with information gleaned by applying natural language processing techniques to what people are writing on the Internet about the songs, artists or albums. He shared their three precepts: “Know everything about music and listeners. Give (and sell) great data to everyone. Do it automatically, with no bias, on everything.” Finally, he ended on a carefully optimistic note: “Be cautious what you believe a computer can do…but data is the future of music.” Earlier this year, Whitman gave a related but longer talk at the Music and Bits conference, which you can watch here. [A disclosure: Regular readers of z=z will be aware that The Echo Nest is a friend of the blog.]

Douglas Repetto, Columbia University: Doing It Wrong

I felt a little for Repetto, who presented a short primer on experimental music for an audience of not-very-sympathetic engineers. He started with Alvin Lucier’s well-known piece, “I am sitting in a room” and then played a homage made by one of his students, Stina Hasse. In Lucier’s original, he iteratively re-records himself speaking, until eventually the resonance takes over and only the rhythms of his speech are discernible (more info). For Hasse’s take, she did the re-recording in an anechoic chamber; the absence of echo damped her voice and her words evolved into staticky sibilant chirps, probably as a result of the digital recording technology. Repetto presented several other works of music, making the case to the audience that there was a commonality of experimental mindset: for both the artists whose work he was presenting and the researchers in the room, the basic strategy was to interrogate the world and see what you find out. Creativity, he argued, stems from a “let’s see what happens” attitude: “creative acts require deviations from the norm, and that creative progress is born not of optimization, but of variance.”

Daniel Trueman, Princeton University: Digital Instrument Building and the Laptop Orchestra

Trueman, a professor of music, started off by talking about traditional acoustic instruments and the ‘fetishism’ of mechanics. Instruments are not, he stressed, neutral tools for expression: the physical constraints and connections of instruments shape how musicians think, the kind of music they play, and how they express themselves (for example, since many artists compose on the piano, the peculiarities of the instrument colour the music they create). But in digital instruments, there is nothing connecting the body to the sound; as Trueman put it, “It has to be invented. This is both terrifying and exhilarating.” Typically, the user performs some actions, which are transduced by sensors of some sort, and then converted into sound. But the mapping between the sensor inputs and the resultant audible output is pretty much under the control of the creator. Trueman presented some examples of novel instruments and techniques for this mapping, as well as some challenges and opportunities: the physical interfaces of digital instruments tend to be a little ‘impoverished’ (consider, by contrast, how responsive an electric guitar is), but these instruments can also communicate wirelessly with each other, for which there is no acoustic analog.
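To make the idea of an invented mapping a bit more concrete, here is a toy sketch of my own, not anything Trueman showed. It assumes a hypothetical instrument with two sensors that each report a normalized reading between 0 and 1: one is mapped to pitch, the other to loudness. The function names, the MIDI note range and the response curve are all arbitrary choices, which is rather the point.

```python
def sensor_to_pitch(value, low_midi=48, high_midi=84):
    """Map a normalized sensor reading in [0, 1] to a frequency in Hz.

    The reading is spread linearly across a range of MIDI note numbers
    (an arbitrary design choice), then converted to Hz with the standard
    equal-temperament formula, where MIDI note 69 is A at 440 Hz.
    """
    value = min(1.0, max(0.0, value))  # clamp out-of-range readings
    midi_note = low_midi + value * (high_midi - low_midi)
    return 440.0 * 2 ** ((midi_note - 69) / 12)


def sensor_to_amplitude(pressure, curve=2.0):
    """Map a normalized pressure reading in [0, 1] to an amplitude in [0, 1].

    The exponent shapes the response: values above 1 make light touches
    quieter, so harder presses feel disproportionately louder.
    """
    pressure = min(1.0, max(0.0, pressure))
    return pressure ** curve


if __name__ == "__main__":
    # Hypothetical readings from a tilt sensor and a pressure pad.
    print(sensor_to_pitch(0.5), sensor_to_amplitude(0.8))
```

Swap the linear pitch spread for a scale lookup, or change the curve, and the same gestures produce a different instrument; nothing in the hardware dictates either choice.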

Elaine Chew, University of Southern California: Demystifying Music and its Performance

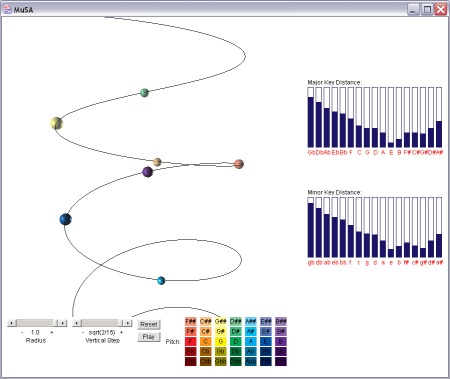

Chew, with a background in both music and operations research, presented a number of her projects which use visualization and interaction to engage non-musicians with music. The MuSA.RT project visualizes music in real-time on a spiral model of tonality (see image above), and she demonstrated it for us by playing a piece by the spoof composer PDQ Bach on a keyboard and showing us how its musical humour derived in part from ‘unexpected’ jumps in the notes, which were clearly visible. She also showed us her Expression Synthesis Project, which uses an interactive driving metaphor to demonstrate musical expressiveness. The participant sits at what looks like a driving video game, with an accelerator, brake, steering wheel and a first-person view of the road. The twist is that the speed of the car controls the tempo of the music: straightaways encourage higher speed and therefore a faster tempo, and tight curves slow the driver down. As well as giving non-musicians a chance to ‘play’ music expressively, the road map is itself an interesting visualization of the different tempi in a piece.
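The speed-controls-tempo idea is easy to sketch, with the caveat that this is my guess at the general shape and not how the Expression Synthesis Project actually works. The version below assumes a simple linear relationship between the car's speed and the tempo in beats per minute; the speed and tempo ranges are invented for illustration.

```python
def speed_to_tempo(speed_kmh, min_speed=20.0, max_speed=120.0,
                   min_bpm=50.0, max_bpm=140.0):
    """Linearly map a car speed to a tempo in beats per minute.

    Speeds outside [min_speed, max_speed] are clamped, so the tempo
    always stays within [min_bpm, max_bpm]. All four limits are
    illustrative assumptions.
    """
    clamped = min(max_speed, max(min_speed, speed_kmh))
    fraction = (clamped - min_speed) / (max_speed - min_speed)
    return min_bpm + fraction * (max_bpm - min_bpm)


def beat_duration(bpm):
    """Return the length of one beat in seconds at the given tempo."""
    return 60.0 / bpm


if __name__ == "__main__":
    # Braking into a tight curve, then accelerating on a straightaway.
    for speed in (30.0, 70.0, 110.0):
        bpm = speed_to_tempo(speed)
        print(speed, bpm, beat_duration(bpm))
```

With a mapping like this, the designer shapes the performance indirectly: drawing a tight curve into the road is a way of writing a ritardando into the score.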

Some quotes from the panel discussion:

Repetto on trying to build physicality/viscerality into digital instruments: “Animals understand that when you hit something harder, it’s louder. But when you hit your computer harder, it stops working.”

Trueman on muscle memory: “You can build a typing instrument that leverages your typing skills to make meaningful music.”

Chew on Rock Band and other music games: “It’s not very expressive: the timing is fixed, with no room for expression. You have to hit the target—you don’t get to manipulate the music.” More generally, the panelists agreed that the democratization of the music experience and communal music experiences were a social good, regardless of the means.

Things I never expected to write on this blog: I am grateful to the National Academy of Engineering, IBM, and Olin College for sponsoring this post, however inadvertently.
